I share Federico’s frustration over saving links. Every link may be a URL, but what those URLs point to can be wildly different. If, like us, you save links to articles, videos, product information, and more, it’s hard to find a tool that handles every kind of link equally well.

That was the problem Federico set out to solve with Universal Clipper, an advanced shortcut that automatically detects the kind of link passed to it and saves it to a text file. Federico accesses that file in Obsidian, although any text editor will work.

Universal Clipper, which Federico released yesterday as part of his Automation Academy series for Club MacStories Plus and Premier members, is one of his most ambitious shortcuts. It draws on multiple third-party apps, services, and command-line tools, and it works either as a standalone shortcut or as a function that sends its results to another shortcut. As Federico explains:

I learned a lot in the process. As I’ve documented on MacStories and the Club lately, I’ve played around with various templates for Dataview queries in Obsidian; I’ve learned how to take advantage of the Mac’s Terminal and various CLI utilities to transcribe long YouTube videos and analyze them with Gemini 2.5; I’ve explored new ways to interact with web APIs in Shortcuts; and, most recently, I learned how to properly prompt GPT 4.1 with precise instructions. All of these techniques are coming together in Universal Clipper, my latest, Mac-only shortcut that combines macOS tools, Markdown, web APIs, and AI to clip any kind of webpage from any web browser and save it as a searchable Markdown document in Obsidian.

Although the shortcut may be complex, the best part of Federico’s post is how easy it is to follow. Along the way, you’ll learn a bunch of techniques and approaches to Shortcuts automation that you can adapt for your own shortcuts, too.

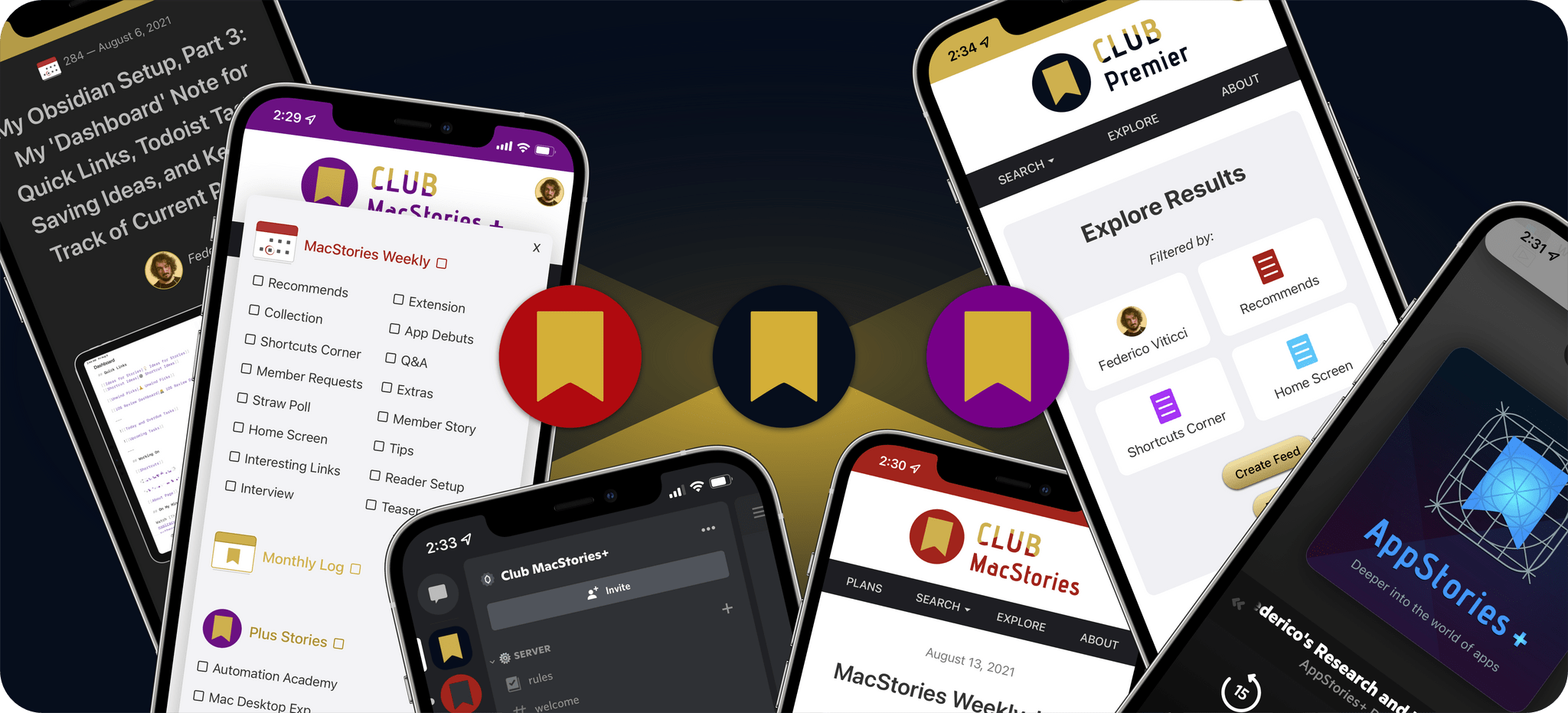

Automation Academy is just one of many perks that Club MacStories Plus and Club Premier members enjoy, including:

- Weekly and monthly newsletters

- A sophisticated web app with search and filtering tools to navigate eight years of content

- Customizable RSS feeds

- Bonus columns

- An early and ad-free version of our Internet culture and media podcast, MacStories Unwind

- A vibrant Discord community of smart app and automation fans who trade a wealth of tips and discoveries every day

- Live Discord audio events after Apple events and at other times of the year

On top of that, Club Premier members get AppStories+, an extended, ad-free version of our flagship podcast that we deliver early every week in high-bitrate audio.
